Guessing what users want is expensive. A/B testing for interactions transforms hunches into evidence, revealing which design decisions actually improve user experience and which fall flat. Whether you’re optimizing microinteractions, refining interaction patterns, or perfecting your conversion-focused design, split testing provides the data you need to make UX decisions and move forward with confidence.

What Is A/B Testing for Interactions?

A/B testing for interactions compares two versions of an interface element to determine which performs better. Unlike traditional A/B testing that might compare entire page layouts, interaction testing focuses specifically on how users engage with dynamic interfaces, interaction states, and behavioral elements.

You might test different animation triggers, compare hover versus click interactions, or evaluate whether gestural interfaces improve task efficiency on mobile devices. The goal isn’t just measuring clicks; it’s understanding how interaction design choices affect user behavior, engagement rate, and ultimately conversion.

The difference between regular A/B testing and interaction testing:

Traditional split tests often focus on static elements like headlines, images, or button colors. Interaction testing digs deeper into human-computer interaction, examining motion design, affordances and feedback, and real-time interactions. It’s about understanding not just what users click, but how they experience the journey between interactions.

Why A/B Testing Matters in Interaction Design

Your design team might love a smooth animation, but do users even notice it? That beautiful gestural interface might look impressive in a prototype, but does it actually reduce cognitive load, or does it add friction? A/B testing answers these questions with data rather than opinions.

A/B testing eliminates dangerous assumptions:

Even experienced user experience designers can’t predict every behavioral outcome. What works in usability testing with five participants might fail with thousands of real users. What succeeds on desktop might frustrate mobile users. Because a test exposes only part of your traffic to a change, A/B testing catches these discrepancies before they damage your engagement rate or conversion metrics across your entire audience.

Key benefits of interaction testing include:

- Objective validation – Remove subjective preferences from design decisions

- Risk reduction – Test changes before full rollout to avoid costly mistakes

- Continuous improvement – Small interaction pattern optimizations compound over time

- User-centered insights – Learn what actually works for your specific audience

- ROI justification – Prove the value of UX investments with concrete numbers

When you combine A/B testing with other research methods like eye-tracking studies, heatmaps and click tracking, and user journey mapping, you build a comprehensive picture of how design iteration impacts real behavior.

Setting Up Effective A/B Tests for Interactions

Start with clear hypotheses tied to interaction metrics:

Don’t test randomly. Every A/B test should address a specific question about user interface design or interaction context. For example: “Adding a loading animation will reduce perceived wait time and decrease bounce rate during data fetching” or “Replacing click interactions with hover previews will increase content exploration by 15%.”

Your hypothesis should connect interaction design choices to measurable outcomes. Whether you’re tracking task completion rate, time-on-task, error rates, or conversion, define success upfront.

Choose the right interaction elements to test:

The most impactful A/B tests focus on high-traffic interactions or bottlenecks in the user experience. Consider testing:

- Microinteractions – Button press animations, form field feedback, loading states

- Navigation patterns – Menu styles, interaction states (hover, tap, drag), breadcrumb designs

- Motion UI elements – Page transitions, scroll animations, entrance effects

- Call-to-action design – Button placement, size, animation triggers, visual hierarchy

- Form interactions – Field validation timing, auto-save indicators, progress displays

- Gestural interfaces – Swipe actions, drag-and-drop zones, multi-touch gestures

Sample size and statistical significance:

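Don’t declare winners prematurely. A/B testing requires sufficient traffic for statistical confidence, and the smaller the difference you want to detect, the larger the sample you need. Treat 1,000 users per variation as a bare minimum for most UX performance metrics: detecting a modest lift on a low baseline conversion rate can require tens of thousands of users per variation. High-traffic sites simply reach the required sample faster.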
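
To see why a fixed number like 1,000 is only a rough floor, here is a minimal sketch of the standard normal-approximation sample size formula for comparing two conversion rates. It assumes a two-sided test at 5% significance with 80% power; the function name and defaults are illustrative, not taken from any particular testing tool.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate: float, relative_lift: float,
                              alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed per variation for a two-sided two-proportion test.

    baseline_rate: current conversion rate of the control (e.g. 0.05 for 5%).
    relative_lift: smallest relative improvement worth detecting (e.g. 0.10 for +10%).
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must lie strictly between 0 and 1 and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p1 + p2) / 2                          # average rate across both arms
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)
```

With a 5% baseline conversion rate and a 10% relative lift worth detecting, `sample_size_per_variation(0.05, 0.10)` comes out at roughly 31,000 users per variation. Halving the detectable lift roughly quadruples the requirement, which is why small differences need much larger samples.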

Tools that calculate statistical significance automatically help guard against false positives that lead to poor design decisions. Remember: a 5% improvement that isn’t statistically significant can’t be distinguished from noise, so it isn’t yet an insight.
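
A significance check can be sketched with a two-proportion z-test, one common choice for conversion-style metrics. This minimal version uses only the Python standard library; commercial platforms may use different methods (Bayesian or sequential approaches, for example), so treat it as an illustration rather than a replica of any tool.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates B - A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate: the best estimate of a shared rate if there is no real difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value
```

For example, 50 conversions out of 1,000 users for version A against 55 out of 1,000 for version B is a 10% relative improvement, yet the p-value is about 0.6, far above the usual 0.05 threshold.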

Best Practices for Testing Interactive Elements

Great user experience doesn’t happen by accident. It emerges from a structured UX design process that values both creativity and rigor.

Test one variable at a time:

When you change multiple interaction patterns simultaneously, you can’t determine which specific element drove results. Isolate variables to learn precise lessons. Test the animation trigger separately from the visual design. Evaluate motion design independently from color psychology changes.

This disciplined approach to design iteration builds knowledge systematically. Each test teaches you something specific about behavior-driven design for your audience.

Consider interaction context and device differences:

An interaction that works beautifully on desktop might fail on mobile. Touch targets that feel spacious on tablets might seem cramped on phones. Cross-device experience demands device-specific testing, not just responsive interactions that scale the same design everywhere.

Test gestural interfaces on actual touch devices, not mouse simulations. Verify that animation triggers perform smoothly on lower-powered devices where real-time interactions might stutter. Context matters as much as the design itself.

Don’t ignore qualitative feedback:

Numbers tell you what happened; user comments explain why. Supplement A/B testing with usability testing sessions where you watch people interact with both variations. The combination of quantitative user behavior analytics and qualitative observation provides complete understanding.

Sometimes tests show surprising results—version B wins despite designer confidence in version A. Without qualitative feedback, you might implement the winner without understanding the underlying user needs driving that preference.

Test for the right duration:

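Running tests too short captures anomalies rather than patterns. Running them longer than necessary delays decisions, but resist stopping the moment results look clear: checking repeatedly and stopping at the first significant reading inflates false positives. Decide the duration in advance from your required sample size. Most interaction tests need at least one full week to account for day-of-week behavioral variations. High-traffic sites might reach significance in days; smaller sites might need weeks.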
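
A planned duration can be derived from the sample size rather than chosen by feel. The sketch below assumes you know roughly how many eligible users reach the tested interaction each day; it rounds up to whole weeks so each day of the week is represented equally, in line with the one-full-week guideline. The function name and parameters are illustrative.

```python
import math


def planned_duration_days(required_per_variation: int, variations: int,
                          daily_eligible_users: int, min_days: int = 7) -> int:
    """Days to run a test: enough traffic for the sample size, rounded up to full weeks."""
    if daily_eligible_users <= 0:
        raise ValueError("daily_eligible_users must be positive")
    total_needed = required_per_variation * variations
    days = max(math.ceil(total_needed / daily_eligible_users), min_days)
    # Round up to whole weeks so weekday and weekend behavior are equally represented.
    return math.ceil(days / 7) * 7
```

With about 31,000 users needed per variation, two variations, and 5,000 eligible users a day, the raw requirement is 13 days, which rounds up to a 14-day test. Fixing this number before launch also makes it easier to resist stopping early.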

Watch for external factors that skew results—marketing campaigns, seasonal shopping patterns, or technical issues that affect only one variation.

Common A/B Tests for Interaction Design

Button interaction states:

Test different hover effects, active states, and loading indicators. Does adding a subtle motion design element to buttons improve click-through rates? Do users prefer instant feedback or delayed confirmation? These microinteractions can significantly affect perceived responsiveness and users’ emotional connection to the product.

Form field interactions:

Forms are conversion bottlenecks where small interaction improvements drive big results. Test inline validation versus submit-time validation. Compare different error messaging styles. Evaluate whether showing password requirements reduces frustration and form abandonment.

Good affordances and feedback in forms reduce cognitive load and improve task efficiency. A/B testing reveals which feedback loop patterns work best for your users.

Navigation and menu interactions:

Test mega menus versus traditional dropdowns, hamburger menus versus visible navigation, or sticky headers versus static positioning. Navigation interaction patterns dramatically affect information architecture effectiveness and user journey success.

Consider testing different animation triggers for menu appearances. Do instant transitions feel more responsive, or do subtle animations provide better contextual cues about interface state changes?

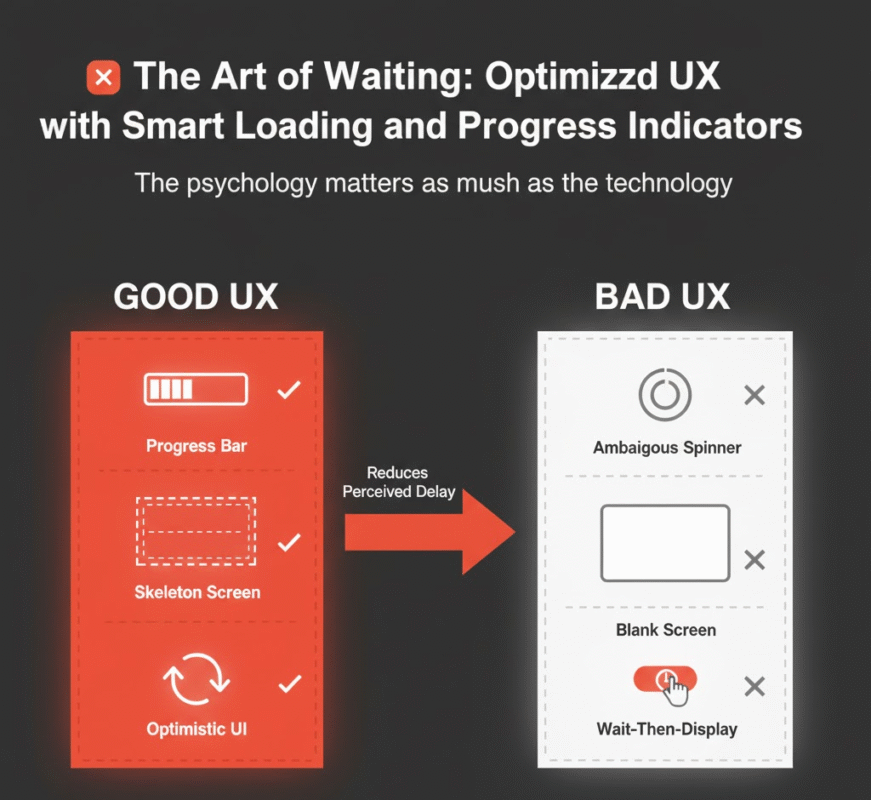

Loading and progress indicators:

Users hate waiting, but well-designed loading states reduce perceived delay. Test skeleton screens versus spinners, progress bars versus ambiguous loading animations, or optimistic UI updates versus wait-then-display patterns.

These dynamic interface choices affect perceived performance even when actual load times remain identical. The psychology matters as much as the technology.

Content interaction patterns:

Should preview text expand inline or navigate to new pages? Do image carousels increase engagement or decrease it? Should related content appear on hover or require clicks? These decisions about interaction context shape how users explore your content.

Test variations to learn how your specific audience behaves with your content types. What works for ecommerce might fail for news sites.

Analyzing Results and Making Decisions

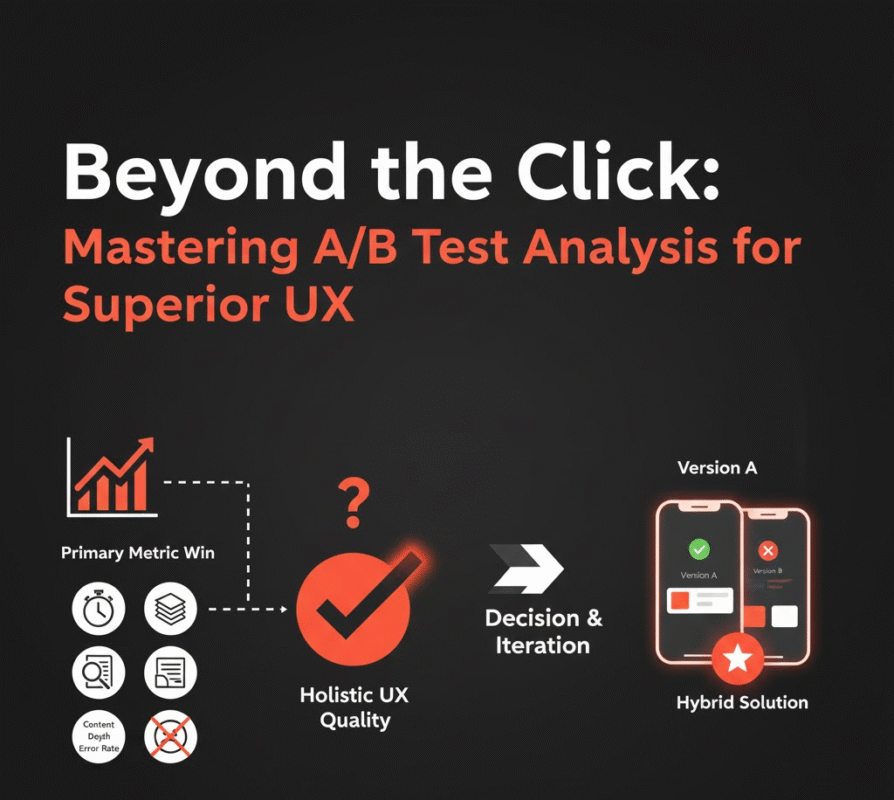

Look beyond primary conversion metrics:

A test might improve click-through rate while harming overall engagement rate or satisfaction. Examine multiple interaction metrics to avoid optimizing one metric at the expense of user experience quality. Check:

- Primary goal conversion (purchases, signups, downloads)

- Engagement indicators (time on site, pages per session, return visits)

- Interaction depth (scroll depth, feature usage, content exploration)

- Error rates and recovery success

- Cognitive friction indicators (back button usage, rage clicks, quick exits)
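
One way to make this multi-metric review routine is to flag guardrail metrics that regressed while the primary metric improved. The sketch below is a hypothetical helper: the metric names, the 2% tolerance, and the `MetricResult` type are illustrative assumptions, and a real review should also check whether each change is statistically significant rather than comparing raw values.

```python
from dataclasses import dataclass


@dataclass
class MetricResult:
    name: str
    control: float   # metric value for the control (assumed non-zero)
    variant: float   # metric value for the tested variation
    higher_is_better: bool = True


def guardrail_violations(guardrails: list[MetricResult],
                         tolerance: float = 0.02) -> list[str]:
    """Names of guardrail metrics that got worse by more than `tolerance` (relative)."""
    violations = []
    for m in guardrails:
        change = (m.variant - m.control) / m.control
        if not m.higher_is_better:
            # For error rates or rage clicks, an increase is a regression.
            change = -change
        if change < -tolerance:
            violations.append(m.name)
    return violations
```

In this framing, a variation that lifted click-through but cut pages per session by 5% and raised the rage-click rate by 10% would be flagged on both guardrails.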

Sometimes the losing variation offers insights worth preserving. Perhaps users loved one element but disliked another. Hybrid solutions that combine the best of both variations can outperform either pure option, but treat a hybrid as a new hypothesis and test it too.

Document learnings for future design systems:

Every A/B test teaches lessons applicable beyond the specific test. If users prefer subtle animations over bold ones, that preference might extend to other microinteractions. If immediate feedback outperforms delayed feedback in forms, apply that principle throughout your component-based UI library.

Build a knowledge base of proven interaction patterns that inform design consistency across products. This turns isolated tests into strategic design-to-code guidelines that make prototype-to-development handoff more efficient.

When to override test results:

Making data-informed UX decisions doesn’t mean letting data dictate them. Sometimes short-term metrics conflict with long-term strategy. A dark pattern might boost immediate conversion while damaging trust and retention. Respect human-centered design principles even when tests suggest otherwise.

Consider the impact on accessibility. If the winning variation creates WCAG violations or excludes users with disabilities, the apparent victory isn’t worth the ethical cost. Goal-oriented design balances multiple objectives, not just conversion optimization.

Tools and Technologies for Interaction Testing

Popular A/B testing platforms:

Modern testing platforms make interaction testing accessible without extensive front-end engineering knowledge. Tools like Optimizely, VWO, and Adobe Target support testing dynamic content, animations, and interactive elements beyond simple layout changes. (Google Optimize, once a popular free option, was discontinued by Google in 2023.)

For more complex interactive prototyping needs, consider dedicated interaction design tools. Framer, Principle, and ProtoPie let you build and user-test sophisticated motion design before committing to development.

Integration with analytics and behavior tracking:

Connect your A/B testing with comprehensive user behavior analytics tools. Combine test results with heatmaps and click tracking from Hotjar or Crazy Egg, session recordings from FullStory or LogRocket, and conversion funnel analysis from Mixpanel or Amplitude.

This integrated approach reveals not just which variation won, but how users actually interacted with each option—where they hesitated, what confused them, and what delighted them.

Custom testing for specialized interactions:

Sometimes off-the-shelf tools can’t test the specific interaction states or animation triggers you need to validate. In these cases, custom implementations using feature flags and analytics events give you flexibility to test unique gestural interfaces or complex real-time interactions.
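
At the core of most feature-flag-based experiments is deterministic assignment: the same user must land in the same variation on every visit, or the results blur together. Here is a minimal sketch, assuming users have a stable ID; hashing the experiment name together with the user ID keeps assignments independent across experiments. The experiment name used here is hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a user to one of `variants` for a given experiment."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    # The first 8 bytes of SHA-256 give a stable, roughly uniform 64-bit integer.
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return variants[bucket % len(variants)]


# Hypothetical experiment comparing a tap menu against swipe-to-archive.
print(assign_variant("user-42", "swipe-vs-tap-archive", ["control", "swipe"]))
```

Because assignment is a pure function of the IDs, any server or client can compute it without a lookup table, and the split stays close to even across large user populations.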

Work closely with your front-end engineering team to instrument custom events that capture the interaction metrics most relevant to your design systems.
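
Whatever pipeline you use, each custom event should carry the experiment and variant it was recorded under, so interaction metrics can be segmented per variation later. The schema below is a hypothetical sketch, not the format of any specific analytics product; field and event names are assumptions to adapt to your own tracking plan.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class InteractionEvent:
    user_id: str
    experiment: str
    variant: str
    name: str  # hypothetical event name, e.g. "archive_completed"
    properties: dict = field(default_factory=dict)
    timestamp_ms: int = field(default_factory=lambda: int(time.time() * 1000))


def to_json(event: InteractionEvent) -> str:
    """Serialize an event for sending to an analytics collector."""
    return json.dumps(asdict(event), sort_keys=True)


evt = InteractionEvent("user-42", "swipe-vs-tap-archive", "swipe",
                       "archive_completed", {"gesture_ms": 310, "retries": 0})
print(to_json(evt))
```

Interaction-specific properties such as gesture duration or retry count live in `properties`, so the core schema stays stable while each experiment records what it needs.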

Integrating A/B Testing into Your UX Design Process

Test throughout the design lifecycle:

Don’t wait for launch to start testing. Use interactive prototyping tools to test concepts early. Conduct usability testing on high-fidelity prototypes. Verify that each variation works correctly in a staging environment before exposing it to production traffic. Continue testing post-launch as you iterate based on user feedback and changing user needs.

This continuous feedback loop between wireframing, prototype design, testing, and design iteration creates products that genuinely serve users rather than just reflecting designer preferences.

Build a testing culture:

A/B testing works best when everyone—designers, developers, product managers, and marketers—values data over opinions. Celebrate learning from failed tests as much as successful ones. Share insights across teams to build organizational knowledge about what works.

Make testing part of every significant interaction pattern decision. Over time, your team develops intuition backed by evidence, improving first-draft quality while maintaining humility about always needing validation.

Balance testing with innovation:

Rigorous A/B testing can lead to incrementalism: small optimizations that never risk bold changes. Don’t let testing paralysis prevent experimentation. Reserve some design effort for untested innovations, especially new interaction patterns that might redefine user expectations rather than optimize existing ones.

The best UX teams combine data-driven refinement with creative vision that sometimes challenges what testing suggests.

The Future of Interaction Testing

Interaction testing is also evolving beyond simple A/B comparisons. Multivariate testing examines multiple variables simultaneously. As AI and machine learning advance, personalization engines adapt interactions to individual users based on behavior, and predictive models of user behavior suggest candidate designs before testing begins.
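
To see why multivariate testing is traffic-hungry, consider a full-factorial design, one common way to set one up: every combination of factor levels becomes its own variation. The factors below are hypothetical examples drawn from the interactions discussed earlier.

```python
from itertools import product


def full_factorial(factors: dict[str, list[str]]) -> list[dict[str, str]]:
    """Every combination of factor levels, one dict per variation."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


# Hypothetical factors: three interface elements with two levels each.
factors = {
    "menu_trigger": ["hover", "click"],
    "transition": ["instant", "fade"],
    "loading_state": ["spinner", "skeleton"],
}
variations = full_factorial(factors)
print(len(variations))  # 2 x 2 x 2 = 8 combinations
```

Three two-level factors already produce eight variations, each of which needs enough users on its own, so the traffic requirement grows multiplicatively with every factor you add.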

Yet the fundamentals remain: understand your users, form clear hypotheses, test rigorously, and let evidence guide design decisions. Whether testing visual cues, motion UI, or complex gestural interfaces, the goal stays constant: discover what actually improves user experience versus what merely looks impressive in presentations.

Start Testing Smarter Interactions Today

A/B testing for interactions transforms user interface design from art to science without sacrificing creativity. By validating assumptions, measuring impact, and learning from real user behavior, you create conversion-focused design that serves both business goals and user needs.

Ready to optimize your interactions? Explore our guides on usability testing, UX performance metrics, and behavior-driven design to build a comprehensive testing strategy.